Today, augmented reality is supported by a lot of phones and tablets, so in many cases, the barrier is not the hardware, but rather the data. If we want to do more than simply view random models, we need data that makes sense in the context of the real world. Geospatial data is a perfect example of data with local context – it only makes sense for the area it was created for. Wouldn’t it be great to see data where it belongs?

Spatial data already “knows” its proper location in the world. All it needs is to be transformed from wherever it is to a format supported by an AR device.

In this blog, I am going to talk about location-based augmented reality, including why it can be useful to augment the world with real data, and how it can work with FME.


The Power of Spatial Data and AR

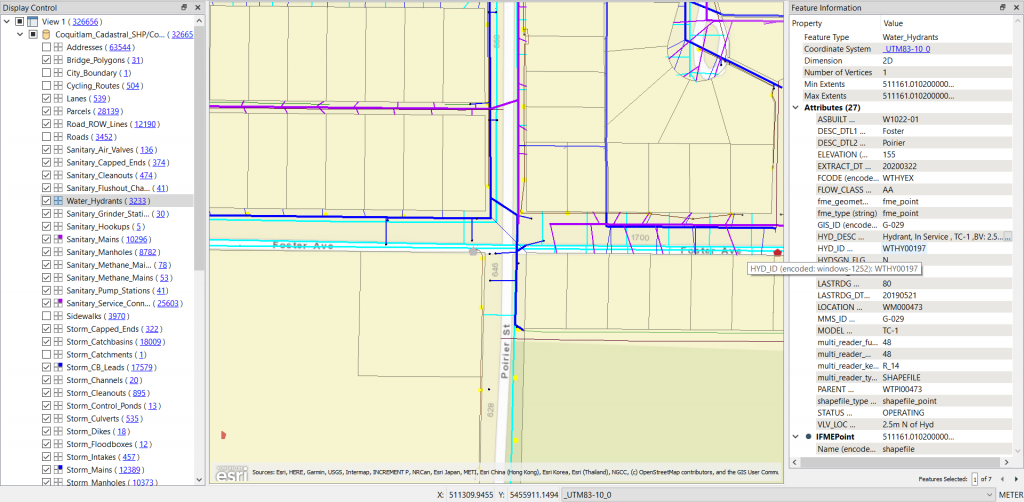

A lot of organizations have huge volumes of geospatial data and use it on a daily basis – for visualization of city assets, for maintenance, analysis and many other purposes. But how do we see this spatial data? Usually, on our screens – our workstations, laptops, maybe mobile devices, or maybe even on paper (even though we are already 20 years into the third millennium). Maps are great (as a cartographer by training, I love maps), but what they lack is the context of the real world. Look at this map showing the underground utilities at an intersection in the City of Coquitlam, British Columbia:

We certainly can understand what assets we have here, where the pipes are located and where they go into the properties, but we don’t get the whole picture of this particular place. Now compare it with this image:

This is the same location and the same dataset. Here, we can see a much larger picture – this is true 1-to-1 scale.

We get the full context for our data and the feel of this neighbourhood, from its buildings and trees to occasional bicyclists and cars passing by. We can walk around and inspect the objects where they belong even if they are hidden underground, all through the lenses of our mobile devices. We can annotate the scene and get more information about its features – when this pole was painted, what species this tree is, and how old this house is. We can even visualize invisible objects, events, processes, or conditions, for example, air quality!

The best part is that these data models can be up to date to the second. There is no need to convert anything ahead of time and no need to pre-load chunks of data onto a phone before going into the field.

How Location-Based Augmented Reality Works

Probably the most famous example of location-based AR is Pokémon Go, which became the most popular mobile game of 2016. It raised interest in augmented reality on a global scale – with over a billion downloads, this game made AR feel simple and approachable.

Most AR apps are not aware of their geolocation. They simply scan their surroundings for surfaces, and after a user taps on a surface, the app places the model there. How can we place a model at a particular location in the world? One way is to add a geographic anchor to the model (a point in the model with known geographic coordinates) and make sure that the model orientation is correct (the Y axis points north). Then, when we come into the vicinity of this location, the app, which constantly tracks the current device location with the built-in GPS, “will know” how to show the model correctly.
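To make the anchor idea a bit more concrete, here is a minimal Python sketch of the underlying arithmetic. It is not how the FME AR app is implemented; it only illustrates how a device position and an anchor position, both given as latitude and longitude, can be turned into an offset in meters along east (X) and north (Y). The function name and the spherical Earth approximation are my assumptions, and the approximation is only reasonable over short distances.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius (spherical approximation)


def geographic_to_local(lat, lon, anchor_lat, anchor_lon):
    """Return the (east, north) offset in meters of a point from the anchor.

    Hypothetical helper: uses a flat-Earth (equirectangular) approximation,
    which is adequate only within a few hundred meters of the anchor.
    """
    d_lat = math.radians(lat - anchor_lat)
    d_lon = math.radians(lon - anchor_lon)
    north = d_lat * EARTH_RADIUS_M
    # Meridians converge toward the poles, so scale longitude by cos(latitude).
    east = d_lon * EARTH_RADIUS_M * math.cos(math.radians(anchor_lat))
    return east, north


def model_origin_seen_from_device(device_lat, device_lon, anchor_lat, anchor_lon):
    """Return where the model origin lies relative to the device, in meters."""
    east, north = geographic_to_local(device_lat, device_lon, anchor_lat, anchor_lon)
    # The device is (east, north) away from the anchor, so the anchor is the
    # opposite vector away from the device.
    return -east, -north
```

With the model's Y axis pointing north, the second function gives the direction and distance at which the model origin should appear from where we stand.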

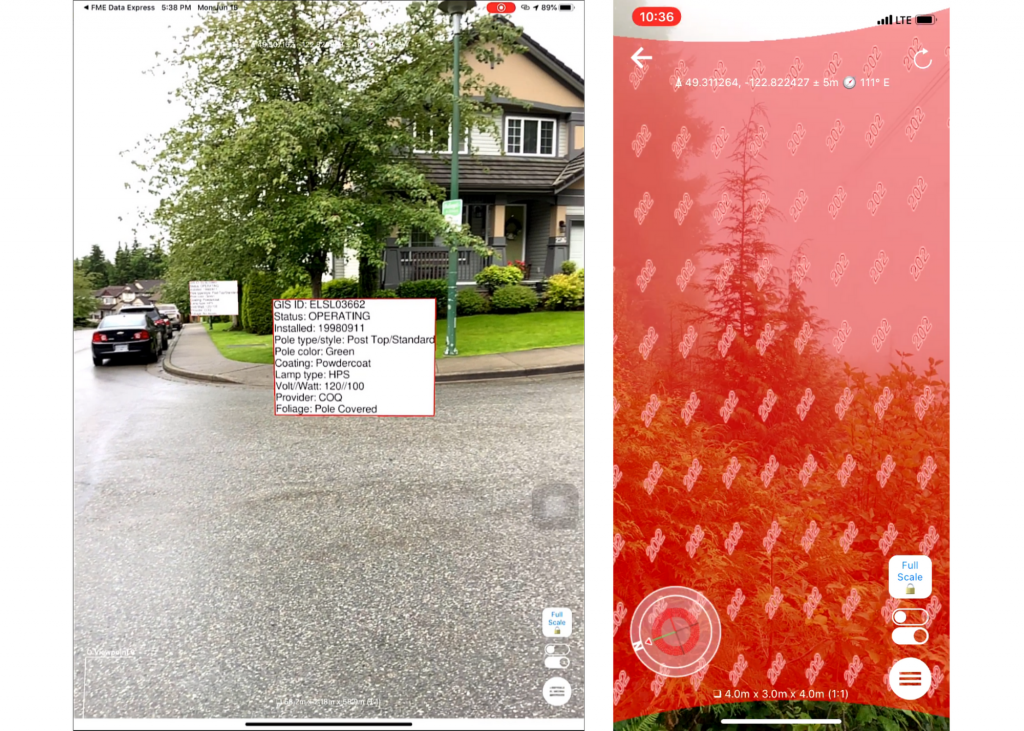

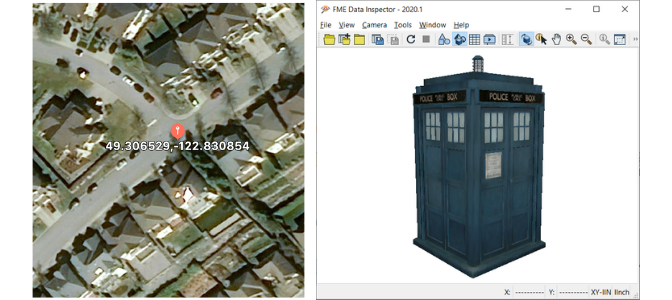

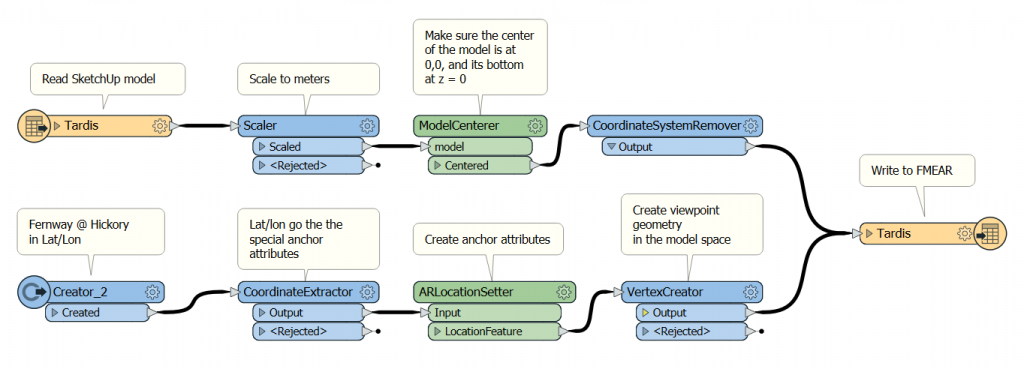

Here is a simple example where I place a SketchUp model downloaded from Trimble 3DWarehouse at an intersection near my house using FME Desktop.

I measured the geographic coordinates of the intersection (you can use your phone or any online mapping system!) and created an anchor point representing this location. I also created the local coordinates for the same point at 0, 0. This means my model will have its center at the specified latitude and longitude. In my second step, I made sure that my model was scaled to meters and also centered at 0, 0. The last step was the generation of an AR file, which contained both the anchor and the model.
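In FME, these steps are done with transformers in a workspace, but the geometry behind them is simple enough to sketch in a few lines of Python. The example below is an illustration only: the function names are hypothetical, and I assume the source model is in inches (a common SketchUp unit), so the conversion factor may differ for your model.

```python
INCH_TO_M = 0.0254  # assumption: the source model uses inches


def center_and_scale(vertices, unit_to_m=INCH_TO_M):
    """Center a model horizontally at (0, 0) and convert its units to meters.

    `vertices` is a list of (x, y, z) tuples in the model's own units.
    The center is taken as the middle of the horizontal bounding box.
    """
    xs = [v[0] for v in vertices]
    ys = [v[1] for v in vertices]
    cx = (min(xs) + max(xs)) / 2
    cy = (min(ys) + max(ys)) / 2
    return [
        ((x - cx) * unit_to_m, (y - cy) * unit_to_m, z * unit_to_m)
        for x, y, z in vertices
    ]


def make_anchor(latitude, longitude):
    """Pair the local origin (0, 0) with the measured geographic coordinates."""
    return {"local": (0.0, 0.0), "geographic": (latitude, longitude)}
```

Because the model is centered at 0, 0 and the anchor ties 0, 0 to the measured latitude and longitude, the center of the model ends up at the intersection.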

Now I can go outside and open the model with the FME AR app. It sits in the middle of the intersection, exactly where I expected to see it. Well, exactly to the precision of the GPS.

There are other methods of georeferencing augmented reality models in the world, such as using WiFi coverage, which can be used for indoor navigation, and visual localization, where the images from the camera are matched to a pre-existing landmark database.

Using Cloud to View Real-time AR Models

The method described above works well only for georeferenced models with fixed content. What if we need to see the data in real time? What if we don’t know in advance where we will need the most up-to-date data? For example, what if a mobile team needs to respond to a call from a resident?

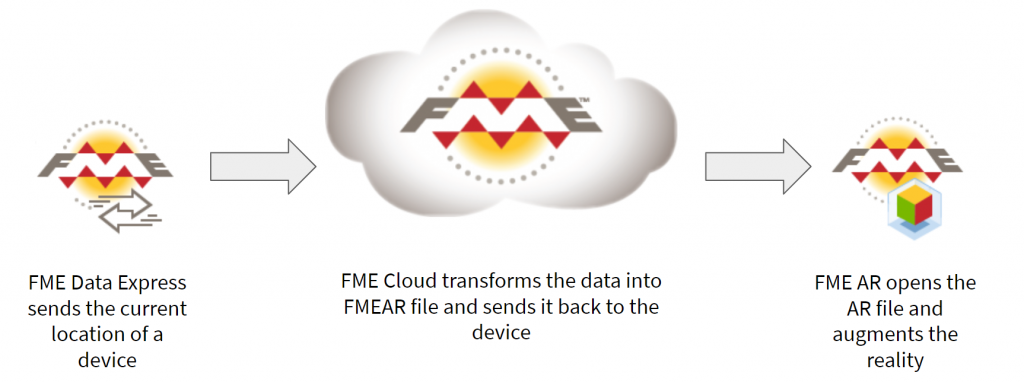

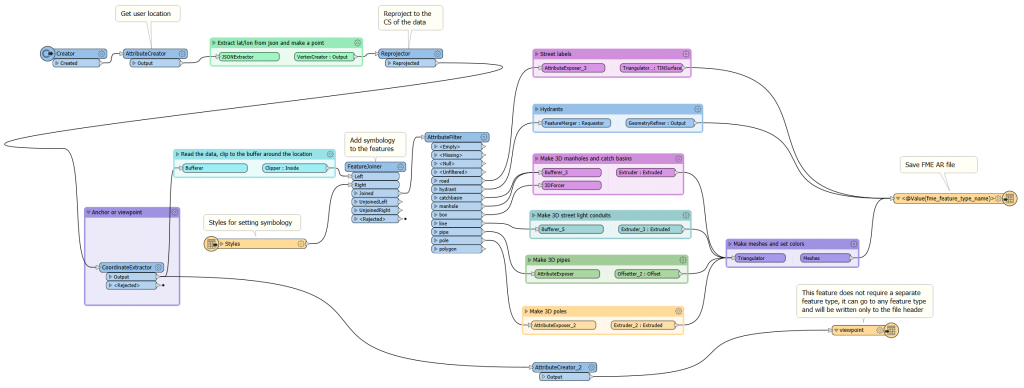

The answer is easy – we generate an AR model from the original data source only when it is requested and only for the location where it is needed. How? We use four components:

- FME Desktop for creating a transformation of the existing data to an AR model.

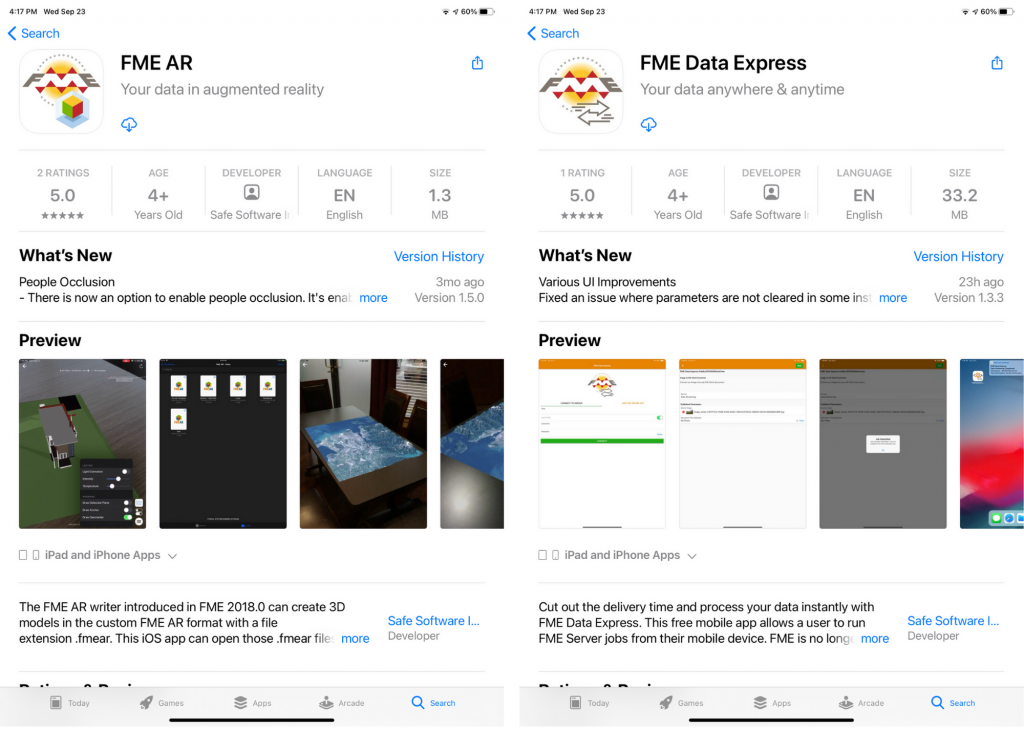

- FME Data Express for submitting requests for model generation using device location to the cloud.

- FME Cloud (a hosted version of FME Server) for creating models upon request.

- FME AR to show them.

Step 1. Define the transformation

Creating an AR model is no different from any other FME transformation – we create a workspace with FME Desktop, where we bring in our data sources in whatever formats they are, then we transform them into a 3D model. The steps may involve scaling (because the AR format works in meters), replacing 2D geometries such as points, lines, and polygons with 3D geometries (extrusions, solids, models), and styling.

For example, instead of points representing fire hydrants, we can use a SketchUp model of a hydrant – now wherever the source dataset has hydrant points, the output dataset will have 3D hydrant geometries. The pipes depicted with lines can be buffered in 3D or replaced with oriented cylinders according to their real size, and so on.
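As an illustration of what "oriented cylinders" means geometrically, here is a small Python sketch that derives the parameters of a cylinder from one pipe segment. This is not FME code; the function name and the output dictionary are hypothetical, and I assume coordinates are already in meters with X pointing east, Y north, and Z up.

```python
import math


def cylinder_for_segment(start, end, diameter_m):
    """Describe a cylinder that replaces one pipe segment.

    `start` and `end` are (x, y, z) tuples in meters.
    Heading is measured clockwise from north (the Y axis);
    pitch is the angle above the horizontal plane.
    """
    dx = end[0] - start[0]
    dy = end[1] - start[1]
    dz = end[2] - start[2]
    length = math.sqrt(dx * dx + dy * dy + dz * dz)
    if length == 0:
        raise ValueError("segment has zero length")
    return {
        "origin": start,
        "length_m": length,
        "radius_m": diameter_m / 2,
        "heading_deg": math.degrees(math.atan2(dx, dy)) % 360,
        "pitch_deg": math.degrees(math.asin(dz / length)),
    }
```

A pipe running due east, for instance, gets a heading of about 90 degrees and a pitch of 0, and its radius comes straight from the real pipe diameter stored in the source data.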

We also need a point with a known geographic location. It will come from the users in the field when they submit their current location. We will buffer this point to define an area, which we will extract from our source data. This way, we only need to transform a tiny portion of the entire dataset, say within 50 meters around our position.
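The idea of extracting only what is near the user can be sketched as follows. In a real workspace, FME would buffer the point and clip lines and polygons as well; this simplified Python example only filters point features by great-circle distance. The feature structure (dictionaries with `lat` and `lon` keys) and the function names are assumptions made for the illustration.

```python
import math


def haversine_m(lat1, lon1, lat2, lon2, radius_m=6_371_000.0):
    """Great-circle distance in meters between two latitude/longitude points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = phi2 - phi1
    d_lambda = math.radians(lon2 - lon1)
    a = (math.sin(d_phi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2)
    return 2 * radius_m * math.asin(math.sqrt(a))


def features_within(features, lat, lon, radius_m=50.0):
    """Keep only the features within `radius_m` of the submitted location."""
    return [
        f for f in features
        if haversine_m(lat, lon, f["lat"], f["lon"]) <= radius_m
    ]


# Hypothetical hydrants around a hypothetical user position.
hydrants = [
    {"id": "a", "lat": 49.2500, "lon": -122.8000},
    {"id": "b", "lat": 49.2503, "lon": -122.8000},  # roughly 33 m north
    {"id": "c", "lat": 49.2510, "lon": -122.8000},  # roughly 111 m north
]
nearby = features_within(hydrants, 49.2500, -122.8000, radius_m=50.0)
```

Here `nearby` keeps hydrants `a` and `b` and drops `c`, which is the same effect as clipping the source data with a 50-meter buffer.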

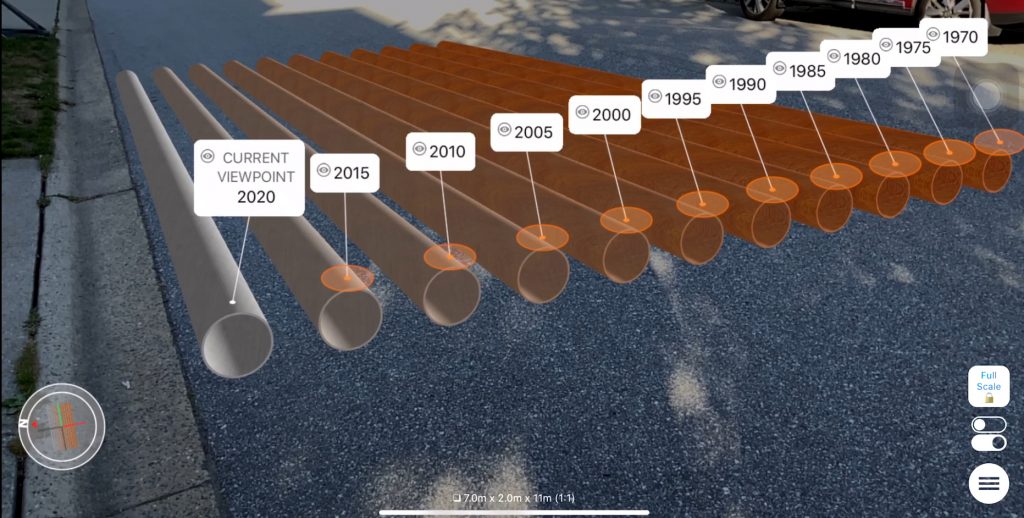

An important step is to style the data by setting colors, transparency, labels, etc. Here, FME gives a lot of flexibility. We can, for instance, set water pipes to blue and natural gas pipes to yellow. Or, we can use textures. For example, we can mix metal and rust textures in different proportions to “age” the pipes to show when they were installed.
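A styling rule like this boils down to a lookup plus a proportion. The sketch below shows one possible way to express it in Python. It blends two colors rather than two textures, which is a simplification of what the text describes, and every name and number in it (the color values, the 50-year "fully rusted" age) is an assumption for illustration.

```python
PIPE_COLORS = {
    "water": (0, 0, 255),    # blue
    "gas": (255, 255, 0),    # yellow
}
DEFAULT_COLOR = (128, 128, 128)  # grey for anything unclassified


def pipe_color(kind):
    """Return the RGB color for a utility type."""
    return PIPE_COLORS.get(kind, DEFAULT_COLOR)


def rust_fraction(install_year, current_year, full_rust_age=50):
    """Return a 0..1 proportion of rust based on the pipe's age in years."""
    age = max(0, current_year - install_year)
    return min(1.0, age / full_rust_age)


def blend(metal_rgb, rust_rgb, fraction):
    """Linearly mix a metal color with a rust color."""
    return tuple(
        round(m + (r - m) * fraction) for m, r in zip(metal_rgb, rust_rgb)
    )
```

For example, a pipe installed 25 years ago gets a rust fraction of 0.5, so its appearance sits halfway between clean metal and full rust.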

In general, AR visualization is a whole new area of exploration: what are the best methods for displaying real data in augmented reality?

Note that at this point, we don’t transform the actual data to AR for any particular location – we may run the transformation for testing purposes over a few representative regions to see whether everything works. We can place the test model on the floor in our office or home, check the look, the size, the orientation, and so on, but the actual models for augmenting the reality will be created only when we request them in the field.

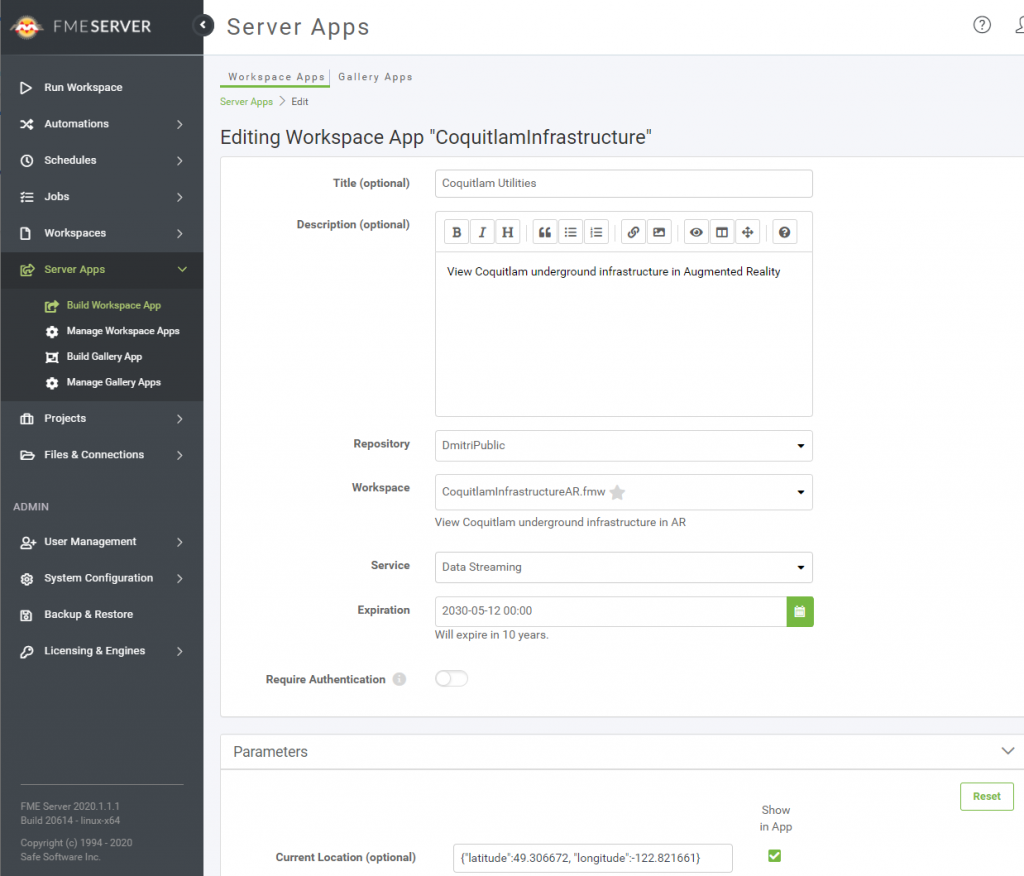

Step 2. Set Up an Online Service

Now that we have an FME Workspace defining the transformation, we need to create a service that will make this transformation available through a mobile device anywhere it might be needed, that is, where we have our data coverage.

We upload the workspace to FME Cloud, set the permissions if necessary, and, for convenience, create an FME Server App, which, to a user, is just a URL.

Step 3. Prepare Your Mobile Device

To get the AR model created relative to our position, we need to submit our location to FME Cloud. The best way to do this is through FME Data Express, a free app for running workspaces uploaded to FME Server or FME Cloud. Another free app, FME AR, will open the generated AR models on a phone or tablet for us. Note that only the iOS version of FME AR supports 1:1 scale and georeferencing, but we are working on bringing the Android version up to speed.

Step 4. Augment the World

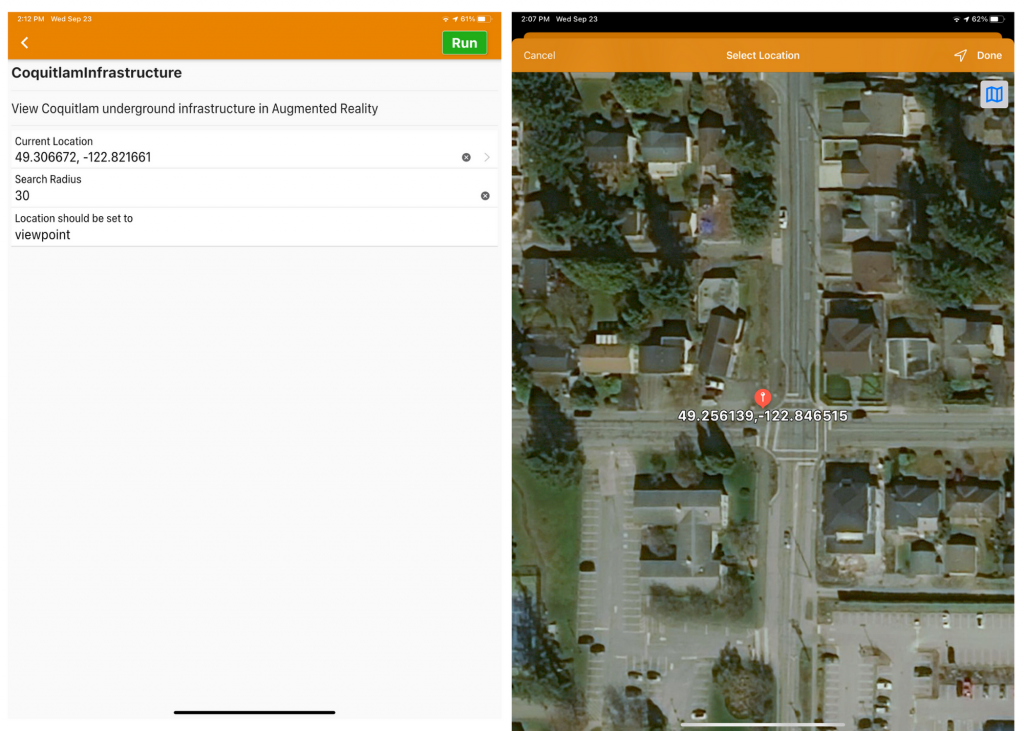

Now we are all set to see the world in a whole new way. Through FME Data Express, we open the service, check our location and adjust it if necessary, set other parameters if they exist (for example, a search radius), and press the “Run” button.

The request will go to FME Cloud and initiate the transformation. Our location will be used for clipping the necessary data from the original database, the workspace will make the AR model with all the symbology we defined, and the model will be sent back to our device, which will open it immediately in FME AR.

FME AR will place the model around the submitted location using the compass in the device, and under ideal conditions, we should see reality augmented with our data.

The GPS and compass precision of a mobile device is usually not high enough for a perfect match, so we may need to slightly adjust the position and rotation of the model. Some clearly visible objects such as hydrants, poles, and manholes can be really helpful during the adjustment step. But once it’s done, we can walk and look around and see the same old reality with our new enriched visual capabilities. This superpower of seeing more is limited only by our imaginations (and a little bit by the data availability).
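In the app, this adjustment is done by hand on the screen, but mathematically it is just a rotation about the vertical axis followed by a horizontal shift. Here is a minimal Python sketch of that correction, assuming model coordinates in meters with X pointing east, Y north, and Z up. The function name and parameters are hypothetical and do not come from FME AR.

```python
import math


def adjust_model(points, rotation_deg=0.0, shift_east=0.0, shift_north=0.0):
    """Rotate points about the vertical (Z) axis, then shift them horizontally.

    A positive `rotation_deg` turns the model counterclockwise when seen
    from above. Heights (z) are left unchanged.
    """
    angle = math.radians(rotation_deg)
    cos_a, sin_a = math.cos(angle), math.sin(angle)
    return [
        (
            x * cos_a - y * sin_a + shift_east,
            x * sin_a + y * cos_a + shift_north,
            z,
        )
        for x, y, z in points
    ]
```

If a hydrant in the model appears a couple of meters away from the real one and slightly turned, a small rotation and shift like this is what lines the two up.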

Conclusion

Location-based augmented reality is taking its first steps into the world of geospatial professionals (what do you think about calling it simply geoAR?). The technology is not perfect yet, but it is developing very quickly as more powerful processors, better sensors, and new algorithms improve our AR experience with every new generation of mobile devices. A lot of questions still await answers. What are the best visualization techniques? How do we show geospatial AR models that are not fixed to the ground? What are the best indoor solutions? Can we reach centimeter-level precision for reliable augmentation of small objects? I could continue the list, but isn’t this exciting? We can not only be a part of the augmented future, we can also shape it.

The geospatial and automation components of AR are the areas where FME is really strong. There is no limit on where data comes from or how it is transformed – it’s totally up to the users to decide how they want their data to look in the real world.

Today, I shared my vision of geoAR. How would you want to augment your reality?

Dmitri Bagh

Dmitri is the scenario creation expert at Safe Software, which means he spends his days playing with FME and testing what amazing things it can do.