Hi FME’ers,

Late in 2016 I posted the idea of a Best Practice analysis tool to the KnowledgeCentre. The idea was to create a tool that could review a workspace and validate the techniques used in it.

With the help of the FME community, this tool was created and posted to GitHub for download and use by anyone.

However, this type of resource is more flexible as an online service. That way no-one needs to install anything; they can just use the service.

So I made that service. If you already tried this in my Code Smells workshop, then be sure to note the new email address below. For anyone else, this post describes how to use the service, what you can expect from the report, how it was set up, and how you can contribute.


The FME Workspace Best Practice Analyzer and Reporting Tool (FWBPAART)

Not the most concise name, but you get the idea. This is a tool for analyzing workspaces, and the service is very simple to use. All you do is:

- Open your email client and create an email

- Add your workspace as an attachment

- Set the subject line to something like “My Project – Best Analysis Report” (it becomes the report title)

- Send the email to: bestpractice@safe.com
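If you would rather submit workspaces from a script than from an email client, the steps above can be sketched with Python's standard `email` and `smtplib` modules. This is a minimal sketch, not part of the service itself: the SMTP host, port 587, login credentials and message body are assumptions to replace with your own outgoing mail settings. Only the destination address and the subject-becomes-title behaviour come from this post.

```python
import smtplib
from email.message import EmailMessage

SERVICE_ADDRESS = "bestpractice@safe.com"  # address given in this post


def build_request(workspace_bytes: bytes, filename: str, title: str,
                  sender: str) -> EmailMessage:
    """Compose an analysis request email.

    The workspace (.fmw) travels as an attachment and the subject line
    becomes the report title.
    """
    msg = EmailMessage()
    msg["From"] = sender
    msg["To"] = SERVICE_ADDRESS
    msg["Subject"] = title
    msg.set_content("Please analyze the attached workspace.")
    msg.add_attachment(workspace_bytes, maintype="application",
                       subtype="octet-stream", filename=filename)
    return msg


def send(msg: EmailMessage, smtp_host: str, user: str, password: str,
         port: int = 587) -> None:
    """Send through your own mail server (host, port, credentials assumed)."""
    with smtplib.SMTP(smtp_host, port) as smtp:
        smtp.starttls()
        smtp.login(user, password)
        smtp.send_message(msg)
```

For example, `build_request(open("MyProject.fmw", "rb").read(), "MyProject.fmw", "My Project – Best Analysis Report", "me@example.com")` builds the message, and `send(...)` hands it to your mail server.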

In response, you will (very quickly) receive an email report on the workspace’s use of best practice techniques.

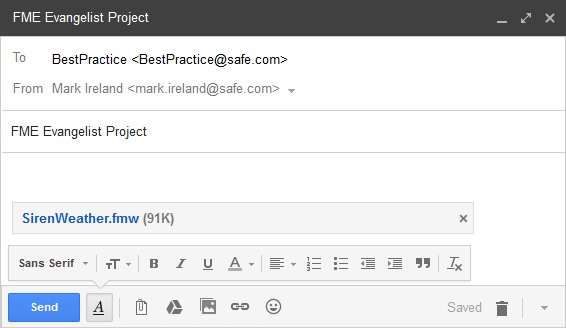

The above is just the report header, by the way. There is a lot more to the reports than that, as we can see below…


The Best Practice Report

There are multiple sections to the report:

- HEADER: A report header with statistics on the workspace file (size, creation date, etc.) plus the FME version used to generate the report.

- WORKSPACE: A report on the workspace in general, such as its version and the Name/Category fields.

- STYLE: A report on the style used in the workspace, in particular annotations and bookmarks.

- DEBUGGING: A report on debugging components (like breakpoints) in the workspace.

- READERS: A report on the workspace’s readers, including version, published parameters, dangling readers, disabled feature types, and excess feature types.

- WRITERS: A report on the workspace’s writers, including version, invalid feature type names, mixed case attributes, and coordinate systems.

- TRANSFORMERS: A report on a workspace’s transformers, including total usage, version, excess scripting transformers, and unpublished parameters.

- PERFORMANCE: A report on performance issues, such as parallel processing and performance parameters.

- SUMMARY: A summary of the number of warnings and errors in the report.

Each section contains multiple tests, and each test can report a PASS, WARNING, or ERROR. There are too many tests to list them all here. To see the full list, send an email without an attachment: I set up the system so that, instead of failing, it returns the documentation.

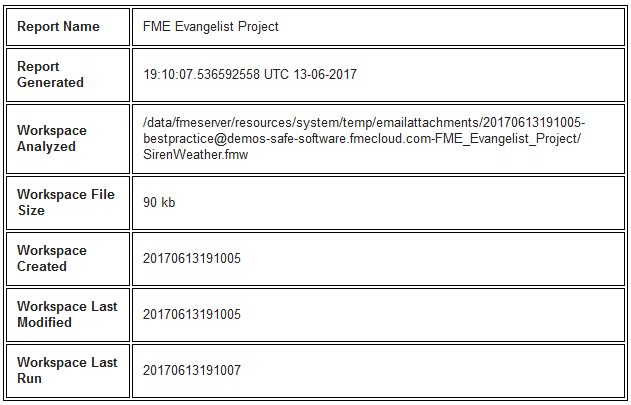
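The report's structure (sections of tests that each return PASS, WARNING or ERROR, plus a SUMMARY that counts warnings and errors) can be modelled as a small data structure. The real tool is built entirely from FME workspaces and transformers, so this Python sketch is only an illustration: the class and function names are hypothetical, and the "zero errors means production-ready" rule reflects the post's guidance that errors must be fixed before production.

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum
from typing import Dict, List


class Result(Enum):
    PASS = "PASS"
    WARNING = "WARNING"
    ERROR = "ERROR"


@dataclass
class CheckResult:
    section: str    # e.g. "READERS", "WRITERS", "PERFORMANCE"
    check: str      # hypothetical check name
    result: Result


def summarize(results: List[CheckResult]) -> Dict[str, int]:
    """Count warnings and errors, as the SUMMARY section does."""
    counts = Counter(r.result for r in results)
    return {"warnings": counts[Result.WARNING], "errors": counts[Result.ERROR]}


def ready_for_production(results: List[CheckResult]) -> bool:
    """Warnings may be ignored; any error blocks production."""
    return summarize(results)["errors"] == 0
```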

However, let’s look at one example of a test: coordinate systems in a writer.

Coordinate System Test

According to the documentation, this test carries out the following:

- Checks for writers with a coordinate system set

- Checks for readers with a coordinate system set

- Looks for any CoordinateSystemSetter transformers

- Issues a warning if a writer sets a destination coordinate system, with no indication that a reader or transformer provides a source coordinate system.

This is fairly typical of the tests carried out. It doesn’t highlight a specific, 100% guaranteed error, but it does show a weakness in the design. Here the issue is that FME might fail to reproject the outgoing data, because there is no guarantee it knows the coordinate system of the incoming features. The source dataset itself might have that information, but we cannot say for sure. More importantly, if the source data is outside of your control, you cannot say for sure either!
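The logic of this test is simple enough to sketch in a few lines of Python. The `Workspace` class below is a hypothetical, heavily simplified stand-in for what the FMW reader extracts (one coordinate system name or `None` per reader and writer, plus a list of transformer names). Only the CoordinateSystemSetter name and the warning rule come from the documentation quoted above.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Workspace:
    """Hypothetical, simplified view of a parsed workspace."""
    readers: List[Optional[str]] = field(default_factory=list)   # coord sys per reader, None if unset
    writers: List[Optional[str]] = field(default_factory=list)   # coord sys per writer, None if unset
    transformers: List[str] = field(default_factory=list)        # transformer type names


def coordinate_system_check(ws: Workspace) -> str:
    writer_sets_destination = any(ws.writers)
    reader_sets_source = any(ws.readers)
    has_setter = "CoordinateSystemSetter" in ws.transformers
    # Warn only when a destination is set with no visible source coordinate system.
    if writer_sets_destination and not (reader_sets_source or has_setter):
        return "WARNING"
    return "PASS"
```

Note that a PASS here means only "a source coordinate system is declared somewhere", not that reprojection is guaranteed to succeed.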

There are many other tests that produce a warning, to say that something may be a problem. Only a few tests produce an error for something that is conclusively bad. But even some errors are a matter of opinion; for example, are Inspector transformers bad in a production workspace? I say yes, but you might disagree.

Overall, I think warnings may be ignored, but any errors must be fixed before putting the workspace into production.

Limitations

Note that this tool is still a work in progress, and there are a few limitations. The biggest one is that we can’t read inside an embedded custom transformer to analyze its contents. Besides that, the HTML report is fairly basic and not easy to navigate. I think we could do a better job there.

There are some more tests we could add too, and some users have suggested we make a scoring system, rather than just warnings and errors.


How Does FWBPAART Work?

If you’re interested in how the reporting tool works, it’s a simple combination of FME Cloud notifications and a processing workspace.

- Your email is forwarded to BestPractice@demos-safe-software.fmecloud.com (thanks to our IT team for setting that up)

- demos-safe-software.fmecloud.com is an FME Cloud instance with an incoming email notification

- The notification saves the attachments from an incoming email

- The incoming email triggers a notification topic. An analysis workspace connected to that topic runs. It…

- …reads your workspace from the attachments folder, using the FMW (workspace) reader

- …carries out various checks on your workspace to assess its quality

- …creates an HTML report and writes it to a Resources folder

- …generates an outgoing email containing the HTML report

- Completion of the analysis workspace triggers a notification topic

- An outgoing email notification connected to that topic is fired and sends the return email
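To make the chain of events above concrete, here is a toy publish/subscribe model in Python. It is not the FME Server or FME Cloud API: the `TopicBus` class, the topic names `BESTPRACTICE_RECEIVED` and `BESTPRACTICE_DONE`, and the message dictionaries are all hypothetical. It only shows how two topics link the incoming email, the analysis workspace and the outgoing email, including the no-attachment case that returns documentation instead of failing.

```python
from collections import defaultdict
from typing import Callable, Dict, List, Tuple

Handler = Callable[[dict], None]


class TopicBus:
    """Toy stand-in for notification topics: publishing runs every subscriber."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, message: dict) -> None:
        for handler in self._subscribers[topic]:
            handler(message)


def build_pipeline() -> Tuple[TopicBus, List[dict]]:
    bus = TopicBus()
    outbox: List[dict] = []  # emails "sent" by the outgoing notification

    def analysis_workspace(email: dict) -> None:
        # Stands in for the analysis workspace triggered by the incoming email.
        if not email["attachments"]:
            html = "<p>Documentation: the list of available tests</p>"
        else:
            html = (f"<h1>{email['subject']}</h1>"
                    f"<p>Report for {email['attachments'][0]}</p>")
        # Completion of the workspace publishes to a second topic.
        bus.publish("BESTPRACTICE_DONE", {"to": email["from"], "html": html})

    def outgoing_email(result: dict) -> None:
        outbox.append(result)

    bus.subscribe("BESTPRACTICE_RECEIVED", analysis_workspace)
    bus.subscribe("BESTPRACTICE_DONE", outgoing_email)
    return bus, outbox
```

Publishing an incoming email to `BESTPRACTICE_RECEIVED` runs the whole chain synchronously and leaves one reply in `outbox`; in the real setup each hop is handled asynchronously by FME Cloud.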

It’s not as complex a tool as it sounds. The notification parts are fairly clear-cut, and the analysis workspace, although large and built with custom transformers, is very modular, so each test is quite easy to understand on its own.

My Favourite Parts

I want to highlight a few parts of the process that I like or find interesting:

- Because an FME Cloud instance has a domain and a built-in email server, it takes only seconds to set up an incoming email notification. How many seconds? Twenty…

- … so when I send an email to fmeevangelist@demos-safe-software.fmecloud.com, any workspace tagged with the FMEEvangelist topic runs. So quick, so easy.

- It’s not very easy for several people to collaborate on a single FME project, so I split the workspace into several custom transformers, which made collaboration easier.

- There’s a trade-off there. Linked custom transformers add a layer of management to a project, especially when you involve FME Server. But splitting the workspace made the project easier to collaborate on and drastically reduced the size of the main workspace (helping future scalability), so the benefits outweighed the cost.

- My favourite test is for writer attributes whose names are a mix of cases; for example, parkid, PARKNAME, City, PostCode!

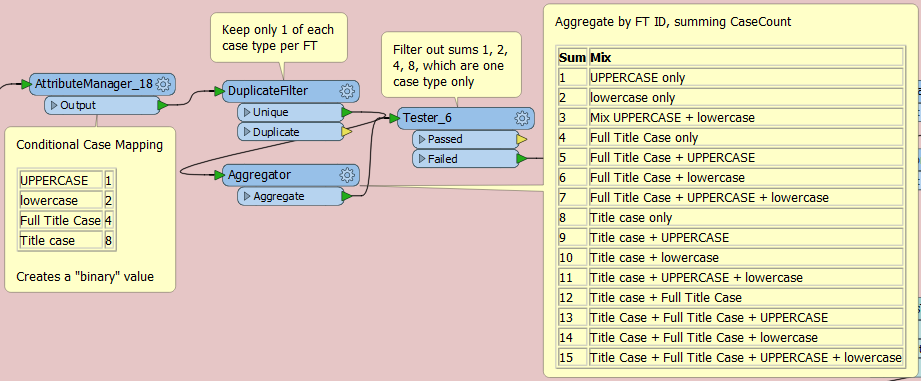

- I just really like this technique, which I guess you could call a form of binary encoding: each case style gets its own bit.

- Each attribute name is assessed and given a number of 1 (UPPERCASE), 2 (lowercase), 4 (Full Title Case), or 8 (Title case).

- Duplicate values are removed and the remaining numbers are summed.

- Now I know (for example) if the value is 11, the writer must have a mix of Title case (8) + lowercase (2) + UPPERCASE (1).

- Therefore, values of 1, 2, 4, or 8 are a pass (consistent naming), whereas anything else is a warning (may not be consistent).

- The new Project tools in FME Server 2017 make it really easy to export the setup from one cloud machine and import it to another.

- I’ll add the export file to GitHub, so that you can download and implement your own private version of this tool.
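The mixed-case scoring from my favourite test can be sketched in Python. Because each case style is a distinct power of two, the sum of the de-duplicated codes equals their bitwise OR, so any total can be decoded back into the styles present. The classification rules are my assumptions, since the post doesn't spell them out: an all-capitals name is UPPERCASE, an all-lowercase name is lowercase, a leading capital followed by at least one more capital (PostCode) is Full Title Case, a leading capital alone (City) is Title case, and anything else (such as parkId) is skipped.

```python
from typing import Iterable, List, Optional

# One bit per case style, as described in the post.
UPPERCASE, LOWERCASE, FULL_TITLE_CASE, TITLE_CASE = 1, 2, 4, 8
LABELS = {UPPERCASE: "UPPERCASE", LOWERCASE: "lowercase",
          FULL_TITLE_CASE: "Full Title Case", TITLE_CASE: "Title case"}


def classify(name: str) -> Optional[int]:
    """Give an attribute name one case-style code (assumed rules)."""
    if name.isupper():
        return UPPERCASE            # PARKNAME, PARK_ID
    if name.islower():
        return LOWERCASE            # parkid, park_id
    if name[:1].isupper():
        # Assumption: a capital after the first letter (PostCode) is Full Title Case.
        if any(c.isupper() for c in name[1:]):
            return FULL_TITLE_CASE
        return TITLE_CASE           # City
    return None                     # unclassified (e.g. parkId); skipped


def case_score(names: Iterable[str]) -> int:
    """Remove duplicate codes, then sum; equivalent to OR-ing the bits."""
    codes = {classify(n) for n in names} - {None}
    return sum(codes)


def decode(score: int) -> List[str]:
    """List the case styles whose bit is set in the score."""
    return [label for bit, label in LABELS.items() if score & bit]


def mixed_case_check(names: Iterable[str]) -> str:
    score = case_score(names)
    # A single style (1, 2, 4 or 8) passes; any combination is a warning.
    # Assumption: a score of 0 (nothing classifiable) also passes.
    return "PASS" if score in (0, 1, 2, 4, 8) else "WARNING"
```

For example, `case_score(["parkid", "PARKNAME", "City"])` returns 11, and `decode(11)` gives `["UPPERCASE", "lowercase", "Title case"]`, matching the 8 + 2 + 1 breakdown in the list.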


Why Create FWBPAART?

FME is (I hope you agree) a superb product, and I see it more and more at the centre of system architecture diagrams. For example, from this presentation at the FMEUC comes a typical FME-centric diagram:

But as the projects that FME is used in get larger and larger, FME components scale up to match, and some users find this hard to organize. I think that’s why projects for organizing FME content exist. In particular – just like this Best Practice tool – people are using FME to organize FME!

There are two great examples from the recent FMEUC.

“Hey Dude, Where’s My Workspace”: A project by the City of Zurich that uses FME to catalogue existing FME workspaces, and presents the results through a wonderful web interface:

The “Where Did We Come From” slide is especially relevant: a twelve-year build-up of 1,000+ workspaces, stored in all sorts of locations (shared folders, developer PCs, etc.), on all sorts of staging environments (development, testing, release, etc.), and with various degrees of documentation. It shows that users must be organized to scale up their FME use.

That project made use of the Workspace Reader (as I did), and that format is covered in more detail in this FMEUC presentation:

Again, the idea is to document and catalogue workspaces, and to analyze workspace dependencies (which is very important). The part I like most is the returned diagram of inter-dependencies, which can be used to fine-tune workspace scheduling.
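A dependency diagram like this can drive scheduling directly. As a sketch, assuming you have already extracted which workspaces consume the output of which others (the workspace names below are hypothetical), Python's standard `graphlib` module (Python 3.9+) can turn those dependencies into stages of workspaces that can safely run in parallel. This is a generic topological sort, not the method used in the presentation.

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set

# Hypothetical catalogue: each workspace -> workspaces whose output it reads.
DEPENDENCIES: Dict[str, Set[str]] = {
    "clean_parks.fmw": {"extract_parks.fmw"},
    "load_parks.fmw": {"clean_parks.fmw"},
    "load_roads.fmw": set(),
    "publish_web_map.fmw": {"load_parks.fmw", "load_roads.fmw"},
}


def schedule_stages(deps: Dict[str, Set[str]]) -> List[List[str]]:
    """Group workspaces into stages; every workspace in a stage can run in
    parallel because all of its dependencies ran in earlier stages."""
    sorter = TopologicalSorter(deps)
    sorter.prepare()  # raises graphlib.CycleError on circular dependencies
    stages = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        stages.append(ready)
        sorter.done(*ready)
    return stages
```

For the catalogue above this yields four stages: `extract_parks.fmw` and `load_roads.fmw` together first, then the clean, load and publish workspaces one after another. A circular dependency is reported as an error rather than silently scheduled.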

So perhaps my project is important not just for best practice on individual workspaces (though that’s a very important part), but also as an example of how to use best practice for organizing FME, using FME.


Summary

If I’ve jumped around different topics in this post, I apologise. In short, I created this best practice tool to be a public service, so please use it however you like. Remember, the email address is bestpractice@safe.com and you can also download the source from GitHub to run it locally (there’s no code, just pure FME workspaces and transformers).

But besides good practice in workspaces, this is an example of how we can use FME to organize FME. Remember these techniques when you are scaling up your FME use. And keep a lookout as we implement this kind of functionality inside FME, like workspace revision control, which I believe is planned for FME Server 2018.

If you would like to contribute to the best practice project, then you could…

- Come up with a better name than FWBPAART! Seriously.

- Add tests or make edits to the core workspace.

- Send me feedback about the report system, or suggest tests that I could add.

I know that not everyone uses GitHub, so if you do want to contribute tests or edits, you can send the files to me and I will commit them on your behalf.

Regards

Thanks

I want to acknowledge the folk who contributed ideas and time to this effort, particularly Sigbjorn. At first I didn’t know how he found the time. After the FMEUC I realized he only needs 2 hours sleep a night! Also thank you to Martin Koch, from whom I took the phrase “using FME to manage FME”.

And if you want to watch other FMEUC videos about Best Practice, then try these:

Mark Ireland

Mark, aka iMark, is the FME Evangelist (est. 2004) and has a passion for FME Training. He likes being able to help people understand and use technology in new and interesting ways. One of his other passions is football (aka soccer). He likes both technology and soccer so much that he wrote an article about the two together! Who would’ve thought? (Answer: iMark)