FME Cloud has been live for over 18 months now, and one of the biggest hurdles customers face is moving their datasets into the cloud—particularly if they are large. Below are the three main data upload challenges we see.

Most scenarios we’ve seen involve loading data up to Amazon Web Services (AWS), since FME Cloud runs on AWS, but these techniques also apply to loading data into other cloud platforms such as Microsoft Azure.

Small Datasets Frequently

To upload small datasets at frequent intervals, you can leverage a number of tools provided by AWS and third parties. The following solutions all use HTTP, which should be sufficient unless your internet connection is really unreliable.

- Code: Use the AWS SDK and integrate directly into your application.

- Network drive: Expose an S3 bucket as a local disk and allow users to upload to S3 by simply dropping files in a local folder (e.g. CloudBerry Drive, TNTDrive, ExpanDrive).

- Web app: Amazon has their own web app in the AWS console. It isn’t the most usable, but it does work.

- Client app: Connect to an S3 bucket much like you would an FTP server, with easy drag-and-drop file upload (e.g. CyberDuck, CloudBerry Explorer).

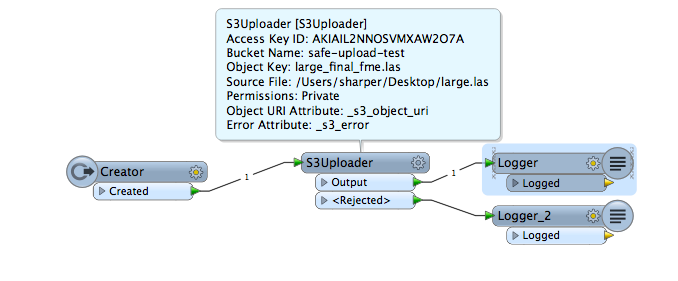

- FME: In FME you can use the S3 transformers to upload and download data. If you have FME Server you can automate the upload of files to S3 by watching local file disks, FTP directories, and URLs.
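As a rough illustration of the "Code" option above, here is a minimal sketch that uses boto3, the AWS SDK for Python, to mirror a local folder into an S3 bucket. The function names, the bucket name and the folder layout are all hypothetical, and the sketch assumes boto3 is installed and AWS credentials are already configured on the machine.

```python
from pathlib import Path


def object_key(root: Path, file_path: Path, prefix: str = "") -> str:
    """Map a local file to an S3 key that mirrors its path relative to root."""
    relative = file_path.relative_to(root).as_posix()
    clean_prefix = prefix.strip("/")
    return f"{clean_prefix}/{relative}" if clean_prefix else relative


def upload_folder(root: str, bucket: str, prefix: str = "") -> int:
    """Upload every file under root to the bucket and return how many were sent."""
    import boto3  # third-party AWS SDK for Python, imported only when uploading

    s3 = boto3.client("s3")  # assumes credentials come from the usual AWS config
    root_path = Path(root)
    sent = 0
    for path in sorted(root_path.rglob("*")):
        if path.is_file():
            # upload_file(Filename, Bucket, Key) handles large files via multipart
            s3.upload_file(str(path), bucket, object_key(root_path, path, prefix))
            sent += 1
    return sent
```

A call such as `upload_folder("./outgoing", "my-example-bucket", prefix="daily")` could then be run from a scheduled task; both names in that call are placeholders.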

Large Datasets Infrequently

Here you can use the AWS Import/Export service or the similar service offered by Azure.

How it works: you load your data onto SSD disks and ship them to Amazon, who then loads the data into a nominated S3 bucket or EBS mount. It is an excellent way to do the initial bulk upload if you plan on sending only change-only updates afterwards.

If loading your data over the network would take 7 days or more, definitely consider using AWS Import/Export. First, it’s cost effective: you don’t pay for bandwidth, only a handling fee and $2.49 per loading hour. Second, it’s secure: you can use a PIN code and software encryption to protect your data while it is in transit. Finally, your data is guaranteed to load within 1 business day of Amazon receiving it, so it is a relatively fast way to load large datasets.
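To check a dataset against the 7-day rule of thumb, a back-of-the-envelope estimate is usually enough. The sketch below is only that: the function name is made up, sizes are decimal gigabytes, and the `efficiency` factor of 0.8 is an assumed allowance for protocol overhead and contention, not a measured value.

```python
def transfer_days(size_gb: float, upload_mbps: float, efficiency: float = 0.8) -> float:
    """Estimate the days needed to upload size_gb at a nominal upload_mbps."""
    bits = size_gb * 8e9  # decimal gigabytes to bits
    seconds = bits / (upload_mbps * 1e6 * efficiency)  # assumed usable share of the link
    return seconds / 86_400  # seconds per day


if __name__ == "__main__":
    for mbps in (2.67, 170):
        days = transfer_days(1000, mbps)  # a hypothetical 1 TB dataset
        print(f"1 TB at {mbps} Mbps: about {days:.1f} days")
```

Under those assumptions, 1 TB takes roughly 43 days on the 2.67 Mbps broadband connection and under a day on the 170 Mbps fibre connection, so only the first case would point towards shipping disks.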

Large Datasets Frequently

This relatively common scenario is the trickiest of the three. People often leverage the scalability and cost of the cloud to process large data volumes, and if the data originates outside the cloud, they need to push it up in an automated way. Examples we run into involve LiDAR and raster data. One client had to upload 12GB of satellite data every 12 hours, and because it was time sensitive it needed to be uploaded as quickly as possible.

To upload large volumes of data, the standard tools AWS offers are often too slow, even on a fast internet connection, because they all rely on HTTP. HTTP carries overhead because it runs over TCP, which simply wasn’t designed for moving large datasets across the WAN.

Accelerated file transfer solutions have come to market that leverage UDP, which can deliver much greater throughput by using more of your available bandwidth and is less affected by network overhead. The list below is by no means exhaustive, but here are a few:
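One way to see why TCP struggles on long links is the bandwidth-delay product: a single TCP stream can have at most one window of data in flight per round trip, so its throughput is capped at window size divided by round-trip time. The sketch below works through that arithmetic. The function names are made up, the 67 ms delay is the figure Aspera reports later in this post, and the 64 KiB window is purely illustrative, since modern stacks scale their windows well beyond it.

```python
def window_needed_bytes(link_mbps: float, rtt_ms: float) -> float:
    """Bandwidth-delay product: bytes that must be in flight to fill the link."""
    return link_mbps * 1e6 / 8 * (rtt_ms / 1000)


def max_throughput_mbps(window_bytes: float, rtt_ms: float) -> float:
    """Upper bound for one TCP stream: one window delivered per round trip."""
    return window_bytes * 8 / (rtt_ms / 1000) / 1e6


if __name__ == "__main__":
    print(f"Window to fill 170 Mbps at 67 ms: {window_needed_bytes(170, 67):,.0f} bytes")
    for rtt in (67, 200):  # 200 ms is an assumed intercontinental delay
        cap = max_throughput_mbps(65_535, rtt)
        print(f"64 KiB window at {rtt} ms: at most {cap:.1f} Mbps")
```

The cap falls as latency rises, and packet loss shrinks the effective window further, which is the gap UDP-based tools aim to close.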

- Tsunami UDP is an open source solution. AWS covers it on their blog.

- Aspera is, I would say, the most established commercial offering. They have created their own protocol called FASP, which is based on UDP. You configure a server on AWS with their software on it that is connected to either S3 or an EBS volume. You send data to the server using one of their client applications (or SDKs to integrate with your applications), which uses the FASP protocol. Once there the Aspera server streams the data directly on to the AWS service you are uploading to. For the version I trialled I had to launch my own AWS instance and configure the software on it. Read more here.

- FileCatalyst works in a similar way. They use UDP-accelerated upload to an AWS instance you configure with their software running on it. Again, nothing is stored locally and the files are streamed through the server directly to S3. For the version I trialled I had to launch my own AWS instance and configure the software on it.

- Signiant is a SaaS offering that allows you to upload files to AWS S3 without hosting or configuring your own server in the cloud. You just download a command line tool and start uploading. They also have an SDK to integrate their technology with your own applications.

Comparison of data upload tools

I ran a variety of tests uploading data to S3 using both commercial and open source tools. Two datasets were uploaded: one was a single 10GB file, the other a series of folders containing 21,000 images. The uploads were tested on two internet connections: one a fibre connection with upload speeds averaging around 170Mbps, the other a broadband connection with an average upload of 2.67 Mbps. Here are the results.

| | FME (HTTP) | CyberDuck (HTTP) | Aspera (FASP) |
|---|---|---|---|
| Upload Speed 170Mbps | | | |
| Total 10GB – Single file | 9m 24s | Failed (443 failed to respond error) | 9m 5s (159.1 Mbps) |
| Total 5GB – 21,000 web map tiles | 12m 31s | 1h 24m | 57m 36s (14.3 Mbps) |
| Upload Speed 2.76 Mbps | | | |
| Total 10GB – Single file | Failed (timeout issue) | 9h 57m | 9h 36m (2.5 Mbps) |
| Total 6GB – 21,000 web map tiles | Failed (timeout issue) | 7h 6m | 5h 41m (2.44 Mbps) |
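Only Aspera reports a throughput figure in the table above, so to compare the tools on the same footing it can help to turn each elapsed time into an effective rate. The helper below is a hypothetical convenience rather than part of any of the tools, and it assumes decimal gigabytes.

```python
def effective_mbps(size_gb: float, hours: int = 0, minutes: int = 0, seconds: int = 0) -> float:
    """Average throughput in Mbps for size_gb moved in the given elapsed time."""
    elapsed = hours * 3600 + minutes * 60 + seconds
    if elapsed <= 0:
        raise ValueError("elapsed time must be positive")
    return size_gb * 8e9 / elapsed / 1e6  # bits divided by seconds, scaled to megabits


if __name__ == "__main__":
    print(f"FME, 10GB single file:    {effective_mbps(10, minutes=9, seconds=24):.1f} Mbps")
    print(f"Aspera, 10GB single file: {effective_mbps(10, minutes=9, seconds=5):.1f} Mbps")
    print(f"FME, 5GB of map tiles:    {effective_mbps(5, minutes=12, seconds=31):.1f} Mbps")
```

On that basis the FME single-file upload works out to about 142 Mbps and the Aspera one to about 147 Mbps. Aspera's own 159.1 Mbps figure is higher, possibly because of a different unit convention or because it excludes setup time, but that is a guess.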

Speed

Aspera comes out on top in most speed tests, although the speed gains were not as large as I expected. FME was not far behind Aspera on uploading the single file, and it was faster at uploading the 21,000 map tiles. This is likely because we just switched to the new SDK and we now leverage a folder upload API call. (Note: I also had a note from Aspera support highlighting some tweaks that can be made to improve the upload speed, but I haven’t had a chance to try them.) FME also had timeout issues when I uploaded on the slow internet connection; we will get this fixed.

I think FME was so close to Aspera (and CyberDuck in some tests) because I was testing in optimal network conditions. Data was copied from Vancouver to Virginia, so it stayed on the North American continent, likely resulting in low latency and reduced packet loss. Indeed, Aspera logged the network delay as being only 67ms. If you try to upload data in more marginal network conditions or to a server on another continent, I think the speed difference between solutions using HTTP and UDP will be more apparent.

Ease of use

Aspera is a bit fiddly to set up (although they have excellent support), but it is a comprehensive solution and has a lot of very useful features. To set it up you have to run your own AWS instance and install their software on it, so in that sense it is another piece of infrastructure you need to monitor and maintain. The features I found most useful included a dashboard giving an overview of current and recently completed uploads, granular control over how much bandwidth to assign to an upload, and the ability to review uploads after they had completed, with statistics on upload speed and delivery time.

I also had a brief look at FileCatalyst. It was simple to set up and configure, but the available supporting tools weren’t quite as rich as Aspera’s, and the Web UI wasn’t quite as usable. It uses the same design pattern, with their software running on an AWS EC2 instance that you manage.

FME is also easy to use, with a drag-and-drop interface. The workflow I built to upload to S3 took me less than five minutes. We also have tools for uploading, deleting, downloading and extracting metadata from S3.

CyberDuck is just like an FTP client where you drag and drop files you want to upload. Once connected to a bucket you can also delete and move files.

What accelerated file transfer solutions have to offer

I started this review thinking the performance increases offered by accelerated file transfer solutions would be the main benefit. It turns out the greatest benefit was reliability, and essentially turning file upload into a fault-tolerant component. Often we design complicated fault-tolerant architectures in the cloud, leveraging all AWS has to offer to ensure we have a stable, reliable application. However, such a design is only as strong as the weakest link. If you are relying on data being uploaded to the cloud to trigger a workflow, my bet is that it is likely to be the weakest link. If uploading files is an integral part of your workflow, I suggest taking a look at the commercial accelerated file transfer solutions.
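If you stay with plain HTTP uploads, part of that fault tolerance can be approximated in your own code by retrying failed uploads with exponential backoff. The sketch below is a generic illustration, not how Aspera or any other product works internally. The `with_retries` name and its parameters are made up, and it retries on any exception, which a real workflow would probably narrow down.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def with_retries(
    action: Callable[[], T],
    attempts: int = 5,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Run action, retrying on any exception with exponential backoff."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(1, attempts + 1):
        try:
            return action()
        except Exception:
            if attempt == attempts:
                raise  # out of attempts: surface the last error to the caller
            sleep(base_delay * 2 ** (attempt - 1))  # wait 1s, 2s, 4s, ...
```

Any upload call can be wrapped this way, for example `with_retries(lambda: upload_one(path))`, where `upload_one` stands in for whatever function sends a single file.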

For most users, the performance you get from FME will be sufficient for uploading files to the cloud. If you are uploading incredibly large files over a poor connection you may run into issues, but I would start my benchmarking here. Give FME a try and let us know what you think. What is your biggest data upload challenge?

Stewart Harper

Stewart is the Technical Director of Cloud Applications and Infrastructure at Safe. When he isn’t building location-based tools for the web, he’s probably skiing or mountain biking.