Fellow Safer Drew Rifkin recently came back from the NSGIC 2011 Annual Conference touting NSGIC’s new Geospatial Data Sharing Guidelines for Best Practices document. While Safe Software has been involved in many of these initiatives over our nearly 20-year history, I was surprised to realize that I’ve never seen such a cogent set of arguments in one place for the open sharing of geospatial data.

The document itself is clearly targeted at a much broader audience than just the geospatial professionals who normally rub shoulders to talk about these sorts of things. For example, I may start using the definition it provides for “Geospatial Data” the next time I’m asked at a dinner party what I do for a living.

Geospatial data identify and relate the geographic location of features and boundaries. They are stored in databases that include descriptive attribute information about locations, allowing the information to be mapped. Geospatial data enable government, consumer and business applications. These data are accessed, manipulated or analyzed through Geographic Information Systems (GIS).

From there, the document goes on to provide a solid argument as to why the open sharing of geospatial data is in the best interest of the common good, and why policies that limit this, either by imposing fees or downright restricting such sharing, are counterproductive. James Fee’s experiences a couple of years ago with his local government, while doing a school project, highlight the downside of such policies.

I remember the earlier years of Safe, when some of our government customers held their data tightly and different groups within the same government had to pay each other for their data. I agree with NSGIC that the effort spent negotiating and accounting for those transactions could have been more profitably spent elsewhere.

3 Myths about the Open Sharing of Geospatial Data

What packs particular punch in this document is the debunking of a few myths around open data.

- Myth #1 is that organizations can pay for GIS operations through data charges. Our experience matches NSGIC’s claim that the effort to create the infrastructure to actually take payment can easily outweigh the true income that would be realized.

- Myth #2 relates to the potential security risk of making data available. NSGIC points out that geographic reality is mostly already there for the viewing. Someone who wants to know where the substations are can just go see them and make their own map if so inclined.

- Myth #3 is about the issue of perceived liability from the use of open data. NSGIC makes solid arguments that the value of sharing far outweighs any risk.

The document ends with a solid charge for decision makers:

Now is the time to change existing policies that are outdated or based on incorrect assumptions. Tremendous value can be realized by all organizations through the open sharing of geospatial data. NSGIC calls on government administrators, geospatial professionals, and concerned citizens to further advance the use of important geospatial data assets and to ensure that they remain freely accessible.

If you’re a decision maker in a government at any level, in any country, this document is a must read. And if you’re a consumer of spatial data provided to you by governments, you owe it to yourself to be up-to-speed on the latest thinking in the data sharing arena. Because of the way it was written, it’s also ideal for passing on to the high-level decision makers you interact with.

As someone who has seen this debate unfold over the years** in Canada, to the point where open data now really has legs at all levels (thanks, Vancouver, for your leading role), I say a big THANK YOU to NSGIC for gathering together and presenting such a solid set of arguments around these issues to help decision makers everywhere.

You can read NSGIC’s Geospatial Data Sharing Guidelines for Best Practices here.

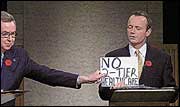

** Indeed, in the year 2000, I was invited to participate in a roundtable discussion on the issue of open data and I recall holding up a sign saying “No Two Tier Data”, with about the same impact as the similar stunt that had been previously done during an all leaders debate in a Canadian federal election. #thingsnottodoinadebate

Dale Lutz

Dale is the co-founder and VP of Development at Safe Software. After starting his career working with spatial data (ranging from icebergs to forest stands) for many years, he and fellow co-founder Don Murray realized the need for a data integration platform like FME. His favourite TV show is Star Trek, which inspired the names for most of the meeting rooms and common areas in the Safe Software office. Dale is always looking to learn more about the data industry and FME users. Find him on Twitter to learn more about his recent discoveries!